Two terms have gained prominence in enterprise data architecture over the last decade: data lakes and data lakehouses. Both are designed to manage vast volumes of structured and unstructured data, but as data needs become more complex, so does the technology required to handle them. Enter the data lakehouse: a hybrid architecture that aims to combine the strengths of the data lake and the data warehouse.

But what exactly sets data lakehouses apart from traditional data lakes? And why should enterprises care?

The Rise of the Data Lake

As organisations moved beyond the constraints of traditional data warehouses, data lakes became the go-to solution for storing raw data at scale. Built on platforms like Hadoop and cloud storage systems such as Amazon S3 or Azure Data Lake Storage, data lakes allowed enterprises to ingest data in any format (structured, semi-structured, or unstructured) without the need for upfront modelling.

Advantages of Data Lakes:

- Scalability: Able to store petabytes of data cost-effectively.

- Flexibility: No strict schema requirements, ideal for varied data types.

- Speed: Quick ingestion and processing of raw data from multiple sources.

However, while data lakes enabled massive data collection, they often fell short on governance, performance, and data quality. Querying data directly from the lake using traditional BI tools proved difficult. This led to a common industry complaint: without proper management, data lakes turn into “data swamps”.
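To make the data-quality problem concrete, here is a minimal sketch of schema-on-read in plain Python. The records, field names, and the `read_orders` helper are hypothetical and chosen only for illustration. Because nothing was validated at ingestion, the reader has to cope with drifting types and renamed fields at query time.

```python
import json

# Hypothetical raw events as they might land in a lake: no schema was
# enforced at ingestion, so field names and types drift between records.
RAW_LINES = [
    '{"order_id": 1, "amount": 19.99}',
    '{"order_id": "2", "amount": "5.00"}',   # numbers stored as strings
    '{"orderId": 3, "total": 7.5}',          # renamed fields
]


def read_orders(lines):
    """Apply a schema at read time; split rows into parsed and rejected."""
    parsed, rejected = [], []
    for line in lines:
        record = json.loads(line)
        try:
            parsed.append({
                "order_id": int(record["order_id"]),
                "amount": float(record["amount"]),
            })
        except (KeyError, ValueError, TypeError):
            # The record does not fit the schema this reader expects.
            rejected.append(record)
    return parsed, rejected


parsed, rejected = read_orders(RAW_LINES)
print(len(parsed), "parsed;", len(rejected), "rejected")
```

Every consumer of the lake has to repeat this kind of defensive parsing, and each may make different choices about what to coerce and what to drop, which is one way inconsistent results creep in.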

The Emergence of the Data Lakehouse

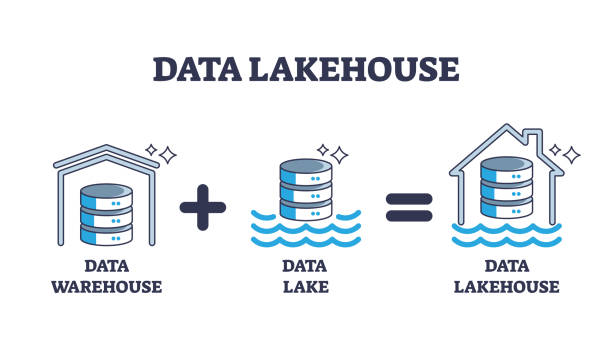

To address these limitations, the data lakehouse architecture was introduced: a convergence of the data lake’s flexibility and the data warehouse’s performance and reliability.

What is a Data Lakehouse?

A data lakehouse is a modern data architecture that combines the storage capabilities of a data lake with the transactional and analytical features of a data warehouse. It supports ACID transactions, robust governance, and schema enforcement, all while still operating on cost-effective storage layers.
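As a rough illustration of schema enforcement and all-or-nothing writes, the sketch below uses a toy in-memory table. The `ToyTable` and `SchemaError` names are hypothetical, and this is not how any particular lakehouse engine is implemented. It only models atomicity and schema checks; isolation between concurrent writers and durability on storage are out of scope.

```python
class SchemaError(ValueError):
    """Raised when a row does not match the table schema."""


class ToyTable:
    """In-memory stand-in for a lakehouse table (hypothetical, for illustration)."""

    def __init__(self, schema):
        self.schema = dict(schema)   # column name -> expected Python type
        self._commits = []           # append-only list of committed batches

    def _validate(self, row):
        if set(row) != set(self.schema):
            raise SchemaError(f"columns {sorted(row)} do not match schema")
        for column, expected in self.schema.items():
            if not isinstance(row[column], expected):
                raise SchemaError(f"{column!r} must be {expected.__name__}")

    def write(self, rows):
        """Validate every row first, then commit the whole batch or nothing."""
        batch = [dict(row) for row in rows]
        for row in batch:
            self._validate(row)
        self._commits.append(batch)  # the batch becomes visible in one step

    def rows(self):
        return [row for batch in self._commits for row in batch]


table = ToyTable({"order_id": int, "amount": float})
table.write([{"order_id": 1, "amount": 19.99}])

try:
    # The second row has a string amount, so the whole batch is rejected.
    table.write([
        {"order_id": 2, "amount": 5.0},
        {"order_id": 3, "amount": "7.50"},
    ])
except SchemaError as error:
    print("write rejected:", error)

print(table.rows())
```

After the failed write the table still holds only the first order: the valid row in the rejected batch was not partially applied.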

Built on open table formats such as Delta Lake (originally developed by Databricks) and Apache Iceberg, and offered by platforms such as Databricks and Snowflake, the lakehouse model allows enterprises to perform real-time analytics, machine learning, and BI directly on the data in their lake without the need to copy it across systems.

Key Differences Between Data Lakes and Data Lakehouses

| Feature | Data Lake | Data Lakehouse |
|---|---|---|
| Storage | Object-based (e.g., S3, ADLS) | Same as data lake |
| Schema | Schema-on-read | Schema-on-write (enforced) |
| ACID Transactions | Not supported | Fully supported |
| Performance | Slower due to lack of indexing | High-performance query engines |
| Governance | Limited controls | Built-in data quality and access control |
| Use Cases | Data storage, basic ETL | Advanced analytics, ML, BI workloads |

Why It Matters for Enterprises

The move from data lakes to data lakehouses is more than a technological upgrade. It’s a strategic evolution. Today, enterprises are under pressure to extract real-time insights, scale AI/ML initiatives, and ensure compliance with increasingly strict regulations.

A lakehouse architecture allows businesses to:

- Reduce data silos by keeping all workloads in a single platform.

- Speed up analytics by avoiding costly data duplication.

- Lower TCO by leveraging open formats and cloud-native infrastructure.

- Improve governance with audit trails, access control, and versioning.
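The governance point about audit trails and versioning can be sketched with a toy versioned table. The `VersionedTable` and `Commit` names, the users, and the data are all hypothetical. For simplicity the sketch stores a full snapshot per commit, whereas real table formats typically record which data files changed rather than copying the whole table.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Commit:
    version: int
    user: str
    operation: str
    rows: tuple  # full snapshot of the table after this commit


@dataclass
class VersionedTable:
    """Toy table that keeps every snapshot (hypothetical, for illustration)."""
    history: list = field(default_factory=list)

    def commit(self, user, operation, rows):
        snapshot = tuple(dict(row) for row in rows)
        self.history.append(Commit(len(self.history), user, operation, snapshot))
        return self.history[-1].version

    def read(self, version=None):
        """Return rows at a given version; the latest when version is None."""
        if not self.history:
            return []
        commit = self.history[-1] if version is None else self.history[version]
        return [dict(row) for row in commit.rows]

    def audit_log(self):
        """List who did what, in commit order."""
        return [(c.version, c.user, c.operation) for c in self.history]


table = VersionedTable()
table.commit("alice", "INSERT", [{"sku": "A1", "stock": 10}])
table.commit("bob", "UPDATE", [{"sku": "A1", "stock": 7}])

print(table.read())            # latest snapshot
print(table.read(version=0))   # the table as it was before the update
print(table.audit_log())
```

Reading an older version is what makes it possible to reproduce a past report or investigate when a value changed and who changed it.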

Use Cases in the Real World

- Retail: Analysing customer behaviour, real-time inventory, and personalised recommendations from a single lakehouse platform.

- Finance: Running compliance checks and fraud detection models directly on transaction logs without needing separate systems.

- Healthcare: Combining structured patient records with unstructured clinical notes for deeper research insights and predictive diagnostics.

For enterprises navigating the complexities of big data, the data lakehouse represents a new era of unified analytics. It offers the elasticity and flexibility of data lakes while delivering the structure and performance of data warehouses.

As AI, machine learning, and real-time decision-making become integral to business success, investing in a data architecture that supports all three is not just smart; it’s essential.

The shift from data lakes to data lakehouses isn’t just a buzzword; it’s a practical step forward for enterprises that want to do more with their data, faster and smarter.
