Introduction

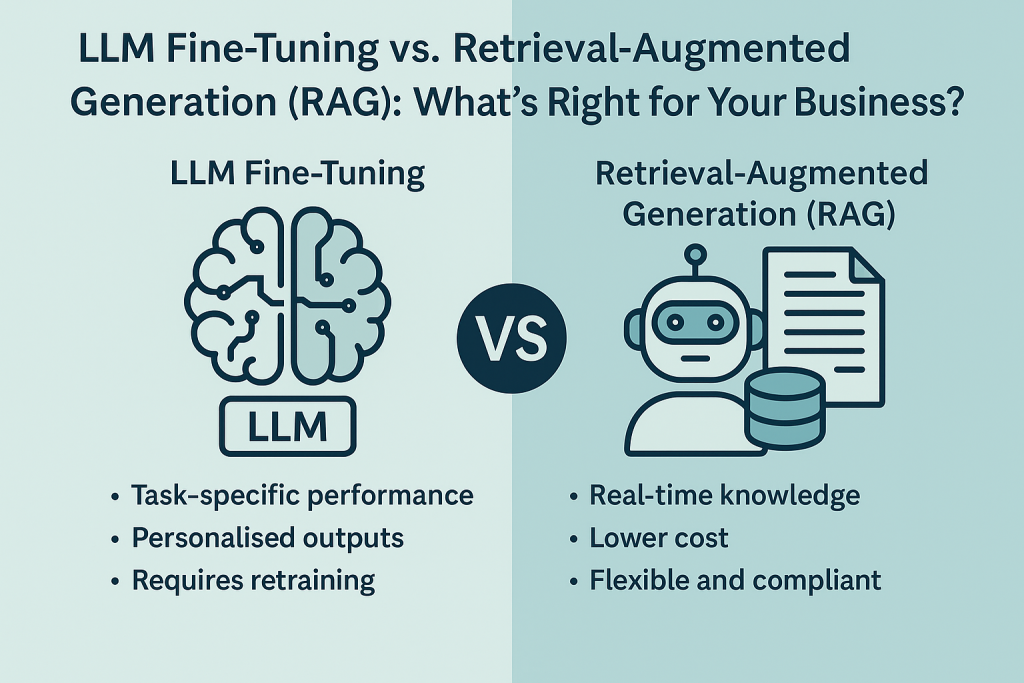

As enterprises adopt generative AI, particularly large language models (LLMs), a key decision arises: Should you fine-tune the model or implement Retrieval-Augmented Generation (RAG)? This choice isn’t just about architecture. It affects everything from performance and cost to compliance and agility.

Understanding both options’ strengths, trade-offs, and business implications is crucial for making the right move in your AI strategy.

What Is LLM Fine-Tuning?

Fine-tuning involves taking a pre-trained model (like GPT or LLaMA) and training it on a specific dataset relevant to your organisation.
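In practice, much of the work is preparing that dataset. Here is a minimal sketch of the step, assuming your examples are question/answer pairs and that your training tool accepts one JSON object per line. The `prompt` and `completion` field names and the sample rows are illustrative assumptions, not a specific vendor's schema, so check the format your framework expects.

```python
import json

# Hypothetical in-house examples: each pairs an input with the desired output.
RAW_EXAMPLES = [
    {"question": "How do I reset my VPN token?",
     "answer": "Open the self-service portal and choose 'Reissue token'."},
    {"question": "  ", "answer": "Orphan answer with no question."},
    {"question": "Who approves travel over the limit?",
     "answer": "Your department head approves it in the expenses tool."},
]


def to_training_records(examples):
    """Drop incomplete rows and map the rest to prompt/completion pairs."""
    records = []
    for ex in examples:
        prompt = ex.get("question", "").strip()
        completion = ex.get("answer", "").strip()
        if prompt and completion:  # skip rows missing either side
            records.append({"prompt": prompt, "completion": completion})
    return records


def to_jsonl(records):
    """Serialise one JSON object per line (assumed training-file format)."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


records = to_training_records(RAW_EXAMPLES)
jsonl = to_jsonl(records)
print(jsonl)
```

The filtering step matters more than it looks: low-quality or incomplete rows end up baked into the model's weights, and removing them later means retraining.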

Pros:

- Task-specific performance: Improved accuracy on domain-specific tasks such as legal summarisation, technical support, or medical documentation.

- Personalised tone and context: Models adapt to brand voice, workflows, or user language.

- Self-contained: Once trained, the model doesn’t need a constant connection to an external knowledge base.

Cons:

- Expensive and resource-intensive: Fine-tuning large models demands GPU time, engineering effort, and ongoing maintenance.

- Model drift: The model’s knowledge is frozen at training time, so as your business data evolves, a fine-tuned model becomes outdated unless it is retrained.

- Governance risk: Tightly coupling knowledge into the model can complicate data auditing, privacy controls, and versioning.

Fine-tuning works best with static, high-quality proprietary data and specific, repeatable tasks.

What Is Retrieval-Augmented Generation (RAG)?

RAG adds a “retrieval” layer to LLMs. Instead of storing all knowledge in the model’s weights, RAG connects the model to an external knowledge base (e.g., a vector database or document store). When asked a question, the system retrieves the most relevant passages at query time, adds them to the prompt, and has the model generate a response grounded in that context.
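The retrieve-then-generate flow can be sketched in a few lines. This is a toy illustration, not a production design: the `embed` function is a bag-of-words stand-in for a real embedding model, the `DOCUMENTS` dictionary stands in for a vector database, and the document IDs and prompt wording are made up for the example. The final call to an LLM is left out.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; in practice this would live in a vector database.
DOCUMENTS = {
    "travel-policy": "Employees must book travel through the approved portal.",
    "expense-policy": "Expense claims are reimbursed within ten working days.",
    "security-policy": "Laptops must use full disk encryption at all times.",
}


def embed(text):
    """Toy embedding: word counts (an assumed stand-in for a real model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, k=1):
    """Return the IDs of the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(
        DOCUMENTS,
        key=lambda doc_id: cosine(q, embed(DOCUMENTS[doc_id])),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, doc_ids):
    """Place the retrieved passages, tagged with their IDs, ahead of the question."""
    context = "\n".join(f"[{d}] {DOCUMENTS[d]}" for d in doc_ids)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


query = "When are expense claims reimbursed?"
top = retrieve(query)
prompt = build_prompt(query, top)
print(prompt)  # this prompt would then be sent to the LLM
```

Two things are worth noticing. Adding a new entry to `DOCUMENTS` makes it retrievable immediately, with no retraining. And because each passage carries its document ID into the prompt, the answer can be traced back to a source.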

Pros:

- Real-time, dynamic updates: Perfect for domains where information changes frequently (e.g., finance, policy, tech support).

- Lower cost and effort: There is no need to retrain the model; you just update the knowledge base.

- Compliance-friendly: Data sources remain transparent, auditable, and manageable.

Cons:

- Retrieval quality is critical: If your search pipeline is weak, the LLM’s response will suffer.

- Latency: The extra retrieval step adds response time compared with direct generation.

- Complex architecture: Requires integration with databases, embeddings, and search pipelines.

RAG shines in environments that demand flexibility, traceability, and continuous data flow, which makes it a good fit for enterprises with broad knowledge needs across departments.

Key Differences at a Glance

| Feature | Fine-Tuning | RAG |
|---|---|---|
| Use Case Focus | Narrow, task-specific | Broad, dynamic knowledge access |
| Updatability | Requires retraining | Update docs instantly |
| Cost | High compute and dev time | Lower infrastructure burden |
| Explainability | Opaque sources | Transparent document trails |
| Compliance & Governance | Harder to audit | Easier to control and version |
| Scalability | Limited to training data | Scales with expanding knowledge base |

Emerging Hybrid Approaches

Increasingly, forward-thinking organisations are blending both techniques:

- Fine-tune for structured tasks: Internal workflows, document templates, chatbots for FAQs.

- RAG for exploratory tasks: Knowledge workers, researchers, and customer support.

This hybrid strategy helps maximise accuracy where needed and maintain flexibility elsewhere.
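One way to picture the hybrid setup is a small router in front of both backends. The sketch below is an assumption about how such routing might look, not a reference architecture: the task labels are hypothetical names derived from the two bullets above, and the fallback to RAG for unknown tasks is a design choice made for this example.

```python
from dataclasses import dataclass

# Hypothetical task labels based on the two categories described above.
STRUCTURED_TASKS = {"internal_workflow", "document_template", "faq_chatbot"}
EXPLORATORY_TASKS = {"research", "knowledge_lookup", "customer_support"}


@dataclass
class Route:
    backend: str  # "fine_tuned" or "rag"
    reason: str


def route(task_type):
    """Pick a backend for a request based on its task label."""
    if task_type in STRUCTURED_TASKS:
        return Route("fine_tuned", "repeatable task with a fixed format")
    if task_type in EXPLORATORY_TASKS:
        return Route("rag", "open-ended question that needs current documents")
    # Assumption: unknown tasks default to RAG so answers stay traceable.
    return Route("rag", "unknown task type; defaulting to retrieval")


for task in ("faq_chatbot", "research", "something_new"):
    print(task, "->", route(task).backend)
```

In a real system the label would probably come from a classifier or from the calling application rather than a hard-coded set, but the split of responsibilities stays the same.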

Business Considerations: Choosing the Right Approach

To decide what’s right for your organisation, ask:

- Is your data stable and static, or always evolving?

- Do you need traceability and transparency for legal or compliance reasons?

- Is real-time accuracy more valuable than consistent tone or structure?

- What is your team’s capacity to maintain AI infrastructure over time?

If you’re scaling knowledge access across teams, RAG offers faster time-to-value. If you’re refining a core workflow, fine-tuning might pay off.
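If it helps to make the checklist concrete, the four questions can be turned into a rough scoring function. Treat this strictly as a conversation starter: the equal weighting, the thresholds, and the parameter names are all assumptions made for illustration, and the last parameter leans on the earlier point that fine-tuning demands ongoing retraining effort.

```python
def recommend(data_changes_often, needs_traceability,
              freshness_over_tone, limited_maintenance_capacity):
    """Map yes/no answers to the four questions onto a rough recommendation.

    Each True counts as one vote for RAG. The weights and cut-offs are
    illustrative assumptions, not an established rule.
    """
    rag_votes = sum([
        data_changes_often,
        needs_traceability,
        freshness_over_tone,
        limited_maintenance_capacity,
    ])
    if rag_votes >= 3:
        return "rag"
    if rag_votes <= 1:
        return "fine-tuning"
    return "hybrid"  # mixed answers suggest combining both


# Example: evolving data and audit needs, but tone matters and the team is large.
print(recommend(True, True, False, False))
```

A split result is a signal to look at the hybrid approach rather than to force a single choice.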

Conclusion

There’s no one-size-fits-all answer in the LLM era. But understanding the trade-offs between fine-tuning and RAG helps align your AI investments with business goals, not just technical possibilities.

The future of enterprise AI may not be a choice between the two, but rather a smart combination tailored to the rhythm of your operations.
